Faster Algorithms With Preprocessing

When I’m trying to solve problems by writing algorithms, I often run into situations where my algorithm is correct, but is far too slow for the problem I’m trying to solve. Sometimes rethinking the algorithm is simple and easy, but in other cases no obvious alternatives present themselves.

In these cases, I find that it’s usually easier to use a preprocessing step to shrink the problem space, which includes all the possible solutions to the problem. This often offers an equivalent speedup for a lot less work.

Most often when my algorithms are too slow, that’s because they’re dealing with large amounts of data and trying to select the best solution or all solutions that satisfy some constraints. I’ll call these filtering algorithms because I don’t know of a better phrase, and selection algorithm is already taken by a different concept. In these algorithms, there are a set of constraints that need to be satisfied before a solution can be acceptable. One way of shrinking the problem space is by ordering the constraint checks such that as much data is eliminated as soon and as efficiently as possible, so that the later more expensive steps don’t need to be run as often. The preprocessing steps that I’ll be talking about generally involve adding a simple constraint check to remove unacceptable solutions early.

Mathematical Justification

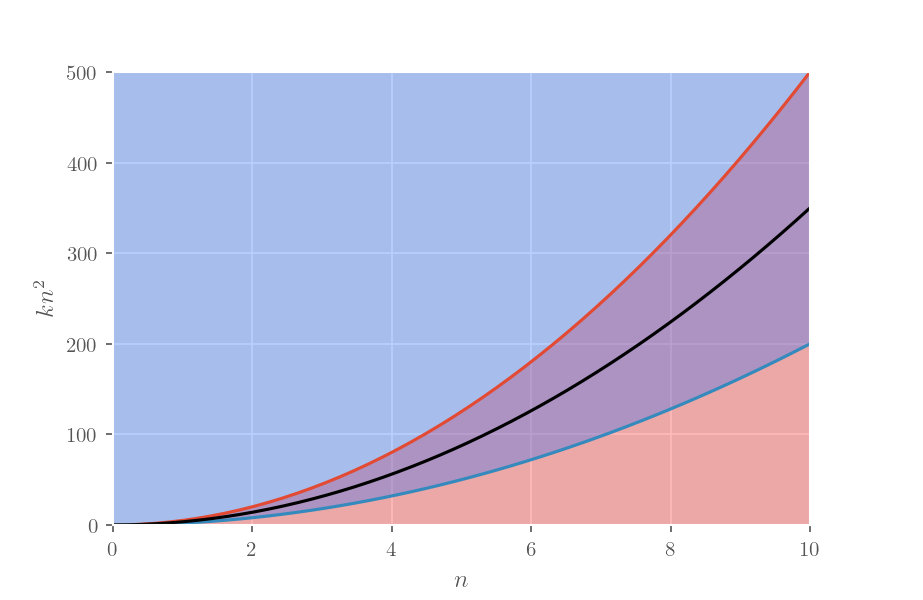

Anyone that’s done much analysis of algorithms has probably come across Big O Notation for describing how an algorithm performs. Wikipedia has a pretty good article on the subject. The general idea is that, given a number of elements $n$, the algorithm will take a different number of steps that varies by $n$. What constitutes a step will vary based on the algorithm, but it generally is an operation that takes a constant amount of time.

For example, bubble sort is an $O(n^2)$ algorithm, which means that for every $n$ items, it takes $n^2$ steps; if it is given 5 elements, then it will take 25 steps. (There are variations that don’t take exactly 25 steps, but they are still $O(n)$ for… complicated mathematical reasons.) Another algorithm, quicksort, runs in $O(n\log{}n)$ time, so it would only take ~12 steps to complete.

One thing to note is that the notation does not take the amount of processing power into account. In practice, bubble sort can actually outperform quicksort on small data sets. Big O notation is more useful when determining how the performance of algorithms change over time as the number of elements grows; in other words, it determines the scalability of the algorithm.

So how does a pre-processing step fit into all this? In practice, the value of $n$ will often stay nearly constant over the use of the algorithm. In these cases, if performance is the issue rather than scalability, then shrinking $n$ is often enough to solve the problem at hand.

An Example

About a year ago, as a learning experiment, I wrote an anagram finder in Go. You can find the full code here. It’s not a particularly spectacular algorithm, but it’s pretty simple. The basic steps are:

- Load a list of words from a file (the “dictionary”).

- Generate “keys” for each word by sorting the letters alphabetically.

- Take the given phrase and remove all the words in the dictionary that cannot possibly be found in that phrase.

- For each word in the dictionary:

- Try to subtract the word from the phrase. If not all the letters in the word are contained in the phrase, then skip this word.

- Determine if the are more letters contained in the phrase. If not, you’ve found an anagram.

- If there are more letters, repeat the process with the new phrase created by subtracting the key from the old phrase.

- Remove duplicates from the found anagrams.

- Print all the remaining anagrams.

That looks like a lot, but by algorithmic standards it’s pretty simple. My best guess for the algorithmic complexity of this is $O(lmn)$, where $l$ is the number of words in the dictionary, $m$ is the average word length in the dictionary, and $n$ is the length of the phrase. It’s not particularly good.

The important part is step 3, which removes any words that cannot be found in the phrase from the dictionary by detecting whether all the letters in that word are contained in the phrase. It’s a very simple step that doesn’t take a whole lot of time, but it significantly cuts down the problem space.

One thing to note is that there are still plenty of false positives: there may be words in the resulting dictionary that are not included in any of the generated anagrams. Pre-processing steps cannot have any false negatives though, because that might erroneously eliminate usable (and possibly even optimal) solutions.

I showed this program to a friend, and he was surprised by how fast it was given how naive my algorithm was. Looking through the code, he pointed out that particular function. We tried taking out that step and measuring the performance penalty.

50 times. A simple preprocessing step made my algorithm 50 times faster.

Of course this won’t work if you are finding anagrams of long phrases or are using immense dictionaries. At that point, switching to a more efficient algorithm is likely in order. But this is a quick way of getting a large speedup for not a lot of effort, and it will also serve you well if and when you do elect to use a more scalable algorithm.